- Google's Tensor Processing Units (TPUs) date back to 2013 and are AI-specific chips optimized for matrix math

- Meta is in advanced talks to use Google's TPUs, challenging Nvidia's dominance in AI chip infrastructure

- Nvidia's stock fell due to fears of losing major clients like Meta and Anthropic to Google's TPU platform

Just like the good old days, Google has once again become the buzzword in Silicon Valley, not for its search engine, not for its Chrome browser, not even for Android, YouTube or Gmail. This time it's about AI.

While there has already been plenty of chatter about Gemini 3.0 and how it is a step ahead on most parameters, perhaps the bigger story around Alphabet this week is a report by The Information that Meta is in advanced talks with Google to use its Tensor Processing Units (TPUs).

The report had a considerable bearing on the Nvidia stock, which tanked in Tuesday's trading session, as investors banked on Google to act as a disruptor in the AI chip race.

Google TPUs In The Fight

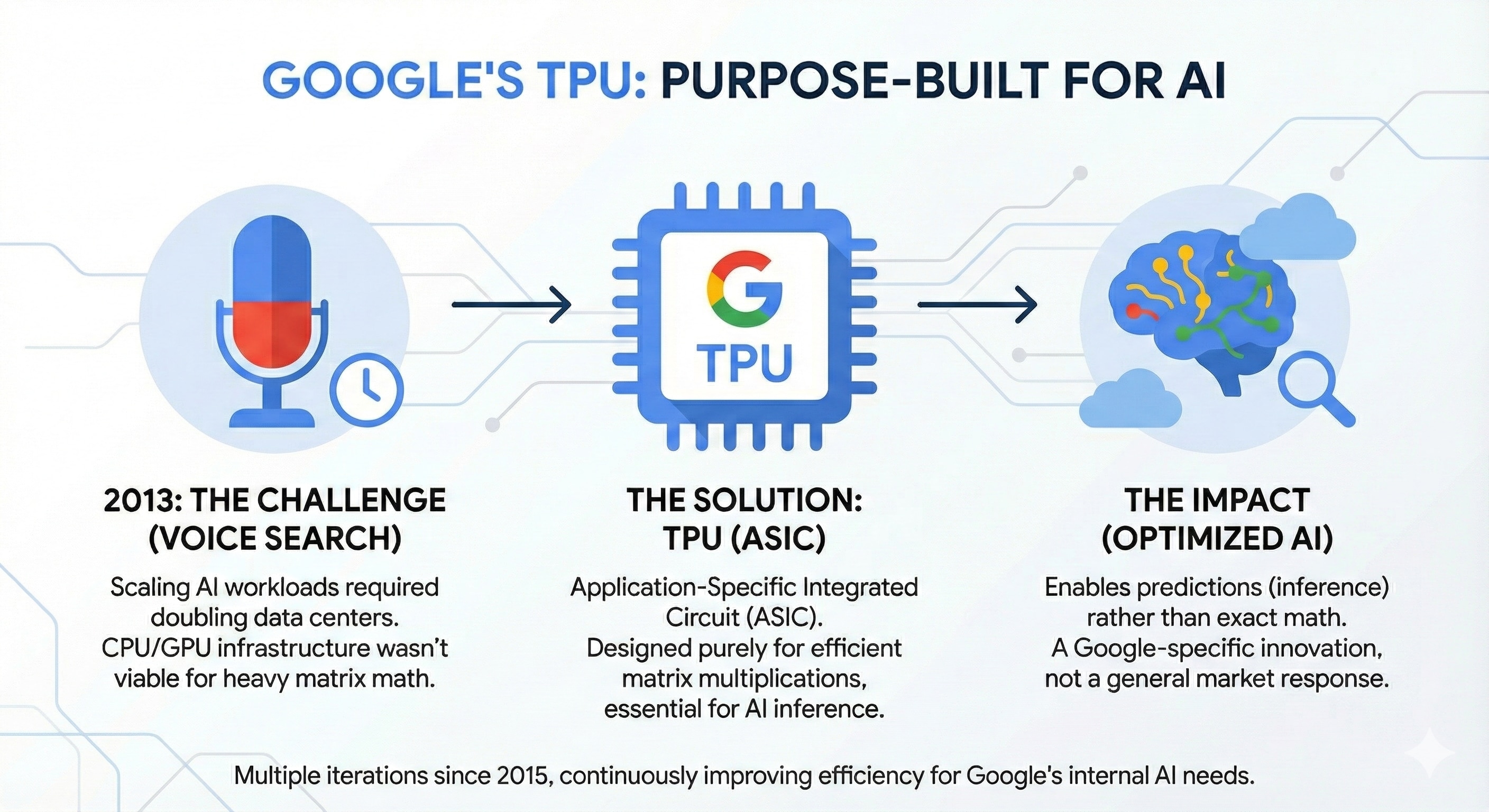

Contrary to popular belief, Google has not recently entered the TPU market. In fact, it has never 'entered' the TPU market, nor were its TPUs a direct response to ChatGPT or Nvidia. TPUs are a very Google-specific thing and, from a computing perspective, they are chips suited for AI and machine learning.

"A TPU is a Tensor Processing Unit, an AI accelerator developed to handle large-scale machine learning workloads efficiently. They are specially optimised to run the tensor-algebra operations at the heart of neural networks," says Ross Maxwell, Global Strategy Lead at VT Markets.

The genesis of Google's TPUs dates back to 2013, when the company realised if every Android user utilised voice search for three minutes a day, it would require Google to double its data centre footprint.

The CPU and even GPU infrastructure of the time was simply not viable for such heavy matrix multiplication, compelling Google to develop the Tensor Processing Unit, an application-specific integrated circuit (ASIC) designed to do matrix math. That is a central requirement for inference, the process in which a trained model is run on new inputs to produce predictions.
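To see why matrix math sits at the centre of inference, consider a rough sketch of a single neural-network layer in plain Python. The layer sizes, weights and function names below are made up for illustration and are not taken from any Google or Nvidia system; the point is only that producing a prediction boils down to a large number of multiply-add operations.

```python
# Illustrative sketch only: one dense (fully connected) layer at inference time.
# All names and numbers are hypothetical, chosen to keep the example small.

def matmul(a, b):
    """Multiply matrix a (m x k) by matrix b (k x n), both as lists of lists."""
    inner, cols = len(b), len(b[0])
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    return [
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]

def dense_layer(inputs, weights, bias):
    """Compute relu(inputs @ weights + bias) for a batch of inputs."""
    product = matmul(inputs, weights)
    return [
        [max(0.0, value + b) for value, b in zip(row, bias)]
        for row in product
    ]

def multiply_adds(m, k, n):
    """Number of multiply-add operations in an (m x k) by (k x n) product."""
    return m * k * n

# A batch of 2 inputs with 3 features each, mapped to 2 outputs.
inputs = [[1.0, 2.0, 3.0], [0.5, -1.0, 2.0]]
weights = [[0.2, -0.5], [0.1, 0.4], [-0.3, 0.6]]
bias = [0.1, -0.2]

outputs = dense_layer(inputs, weights, bias)
small_cost = multiply_adds(2, 3, 2)        # the toy layer above
large_cost = multiply_adds(1, 4096, 4096)  # one input through a 4096-wide layer
```

The toy layer needs 12 multiply-adds, while a single input passing through one hypothetical 4096-wide layer needs more than 16 million. A real model stacks many such layers and runs them for every request, which is the kind of workload a chip built only for matrix math targets.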

Since launching TPUv1 in 2015, Google has dished out multiple versions of the TPU, with the current version, TPUv7, codenamed Ironwood, causing a serious disruption in the market.

Infographic generated by Gemini AI.

Shifting Strategy

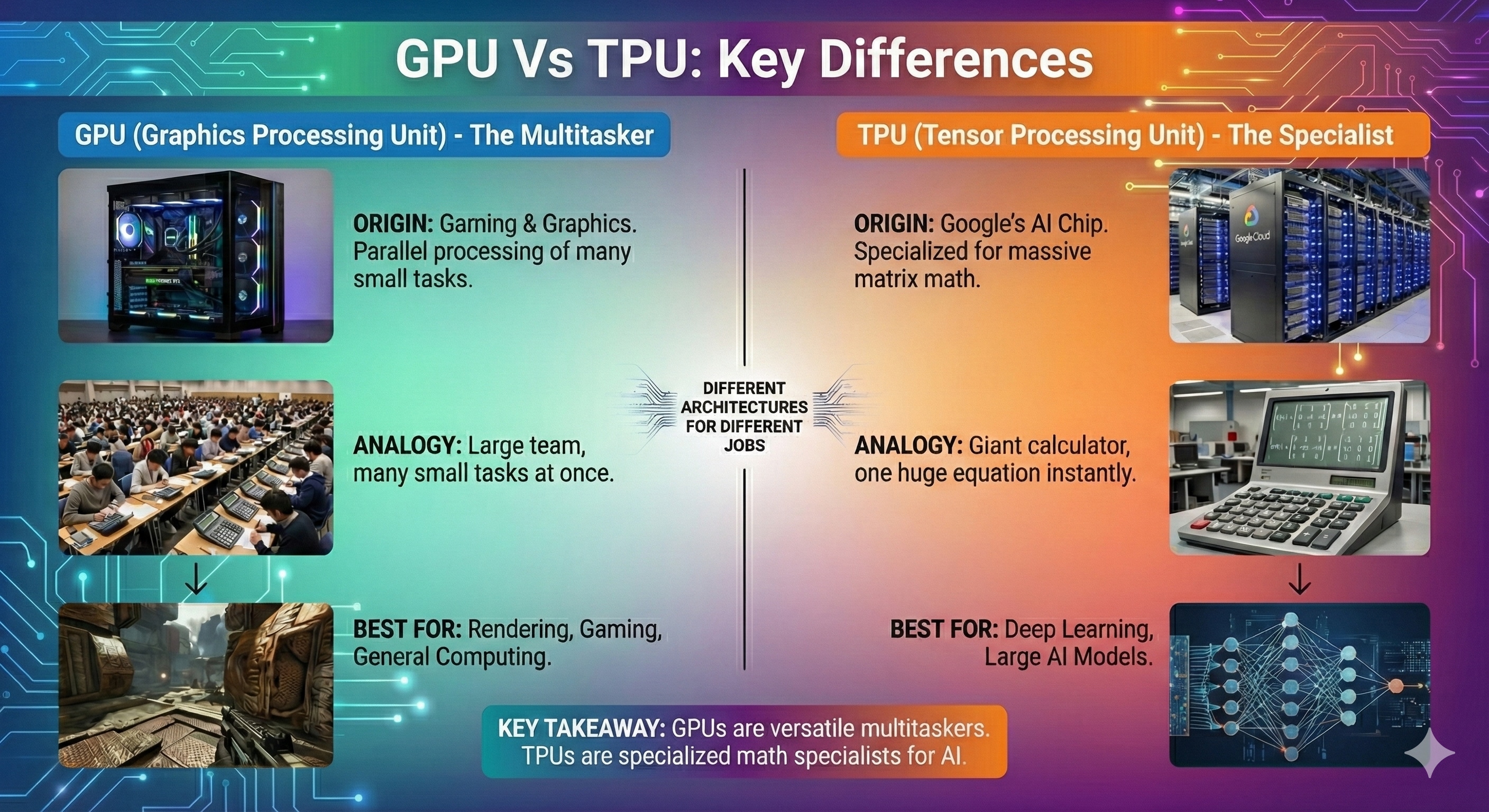

For the longest time, Google treated TPUs primarily as a competitive advantage for its own services. External access was limited and often came with complex software requirements. This is in sharp contrast to Nvidia, whose CUDA platform, coupled with its GPU infrastructure, gives more flexibility.

But the rise of generative AI has brought a significant shift in the way Google sees its TPUs. The company soon began to weaponise its silicon, marketing TPUs as a cost-effective and superior platform for the 'age of inference'.

The deal with Anthropic and the negotiations with Meta are the fruits of this pivot—Google is now operating as a merchant silicon vendor in all but name, leasing its architecture to the world's most demanding AI labs.

Three differences between GPUs and TPUs. (Image: Nano Banana Pro)

Understanding Nvidia Stock Fall

Nvidia's stock is reacting to reports of Meta's talks with Google and to Anthropic's commitment to gigawatt-scale TPU infrastructure.

The reaction in Nvidia's stock stems from the identity of the defectors: Meta and Anthropic are not merely customers but bellwethers for the entire industry.

Nvidia stock under pressure in the past month. (Image: Nano Banana Pro)

Meta Platforms, for one, has historically been the quintessential Nvidia customer. The training of their Llama model family required the deployment of countless H100 GPUs, which are designed by Nvidia. For the longest time, Meta's infrastructure was viewed as a proxy for Nvidia's long-term health.

However, the revelation that Meta is in advanced talks to procure billions of dollars in Google TPU capacity, initially via cloud rental in 2026 and subsequently through on-premise deployment of TPU silicon by 2027, doesn't bode well for Nvidia.

The case of Anthropic is perhaps even more interesting. The lab, founded by former OpenAI employees, works in an ecosystem built around three different chips: Nvidia's GPUs, Amazon's Trainium and Google's TPUs. This suggests that the software friction that once trapped companies in the Nvidia ecosystem can be overcome.

The decline in Nvidia's stock, therefore, is purely a recognition that its Total Addressable Market (TAM) is being eroded from the top down.

Hyperscalers such as Google, Amazon, Microsoft and Meta account for nearly half of Nvidia's data centre revenue. If these customers successfully move their internal workloads away from Nvidia, either to their own chips or to Google TPUs, Nvidia's business will be seriously impacted.

"The news that Meta Platforms may be in talks with Google to adopt Google's TPUs highlights that Nvidia's dominance is no longer unchallenged," says Maxwell.

"For Nvidia, this isn't an existential crisis, but it is a clear message that the AI-chipmaking ecosystem is evolving fast, and the company will need to stay aggressive and dynamic on performance, pricing, and platform support to retain its lead," he added.

Nvidia Vs Google And Who Is Really Ahead?

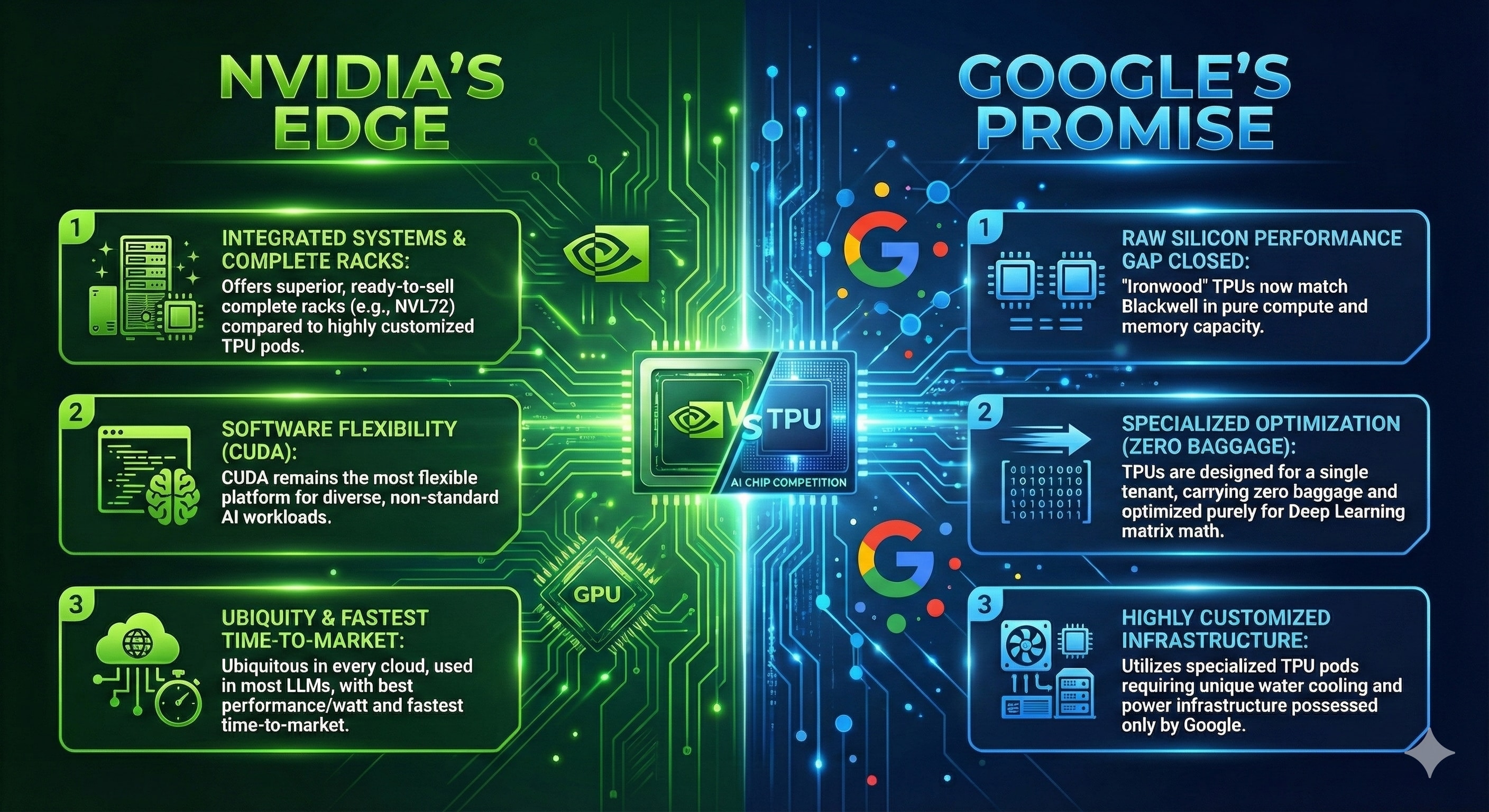

In its statement on Tuesday, Nvidia, while acknowledging Google's advancements in AI, said that its technology is a 'generation ahead' of any other AI chip in the market. This is partially true.

Nvidia takes the cake when it comes to integrated systems. The company can sell a complete rack (NVL72), which gives it an edge over Google's highly customised TPU pods, as these require specialised water cooling and power infrastructure that only Google possesses.

Nvidia is also ahead in terms of software flexibility, with CUDA still being one of the most flexible platforms for non-standard AI workloads.

However, in terms of raw silicon performance, that 'generation gap' has closed, as Google's Ironwood matches Blackwell in compute and memory capacity.

Put simply, Nvidia's strength is also its biggest weakness. When it designs chips, it must design for everyone, from a biology startup to a gaming PC to a hyperscaler. This forces some compromise, meaning GPUs still carry a lot of baggage that limits their capacity and potential.

Google's TPUs, in contrast, are designed for a single tenant (Google) and carry zero baggage. They are optimised purely for the matrix math of deep learning, making things a little more tricky for Nvidia as it looks to maintain its dominance in the world of AI.

Nvidia vs Google fight. (Image: Nano Banana Pro)

Bank of America analyst Vivek Arya recently spoke about Alphabet's custom TPU chips, arguing that they may not pose any significant threat to Nvidia's competitive edge in the market, according to Investing.com.

The analyst added that Nvidia's AI chips are “ubiquitous/available in every cloud and involved in almost every other LLM” and offer “the fastest time-to-market and best performance/watt.”
