What a week it has been! Artificial intelligence and technology journalists in the United States are having the time of their lives taking aim at Perplexity, as they should. Meanwhile, Nvidia Corp. has a cool new way for large language model developers to get their hands on data; it just might not be human-generated.

Here is some AI news from the past week that's worth a read.

Perplexity's Pesky Plagiarism Problem

Nobody likes their work to be plagiarised. One would think that the last person to plagiarise would be a journalist. Turns out, artificial intelligence doesn't have those qualms.

Enter Perplexity AI.

To understand why every tech journalist in the United States is currently having a gala time writing factually accurate hit pieces on Perplexity, you need to know a little bit about the company's tech and its Chief Executive Officer, Aravind Srinivas.

Perplexity basically works like a search engine, which is backed by various large language models. For example, ask its chatbot something and it'll trawl the internet and give you an answer. Think of it as a mix between ChatGPT and Google's AI Overviews.

The company claims that "users get instant, reliable answers to any question, with complete sources and citations included." Except, not really.

We'll come to the 'why not?' in a bit. First, let's set the stage.

Forbes ran a hard pay-walled story on Google's former CEO Eric Schmidt founding and funding an AI-driven military drone company called White Stork. The magazine had reported on it earlier, which led to Schmidt changing the name and speeding up whatever the company was working on. It's a very good story and I highly recommend reading it if you can spare the time and the dollars.

Here comes the rub.

On June 7, Perplexity published its own story on Schmidt eerily similar to Forbes' article. If that has your plagiarism alarms going off, you're right. Forbes' Chief Content Officer, Randall Lane, released an article on June 11 rightfully calling out the blatant plagiarism. Adding insult to injury is the fact that Perplexity's article has not a single citation to Forbes at all. But that's not all.

According to Lane, Perplexity sent out a mobile phone push notification to its users and even created an AI-generated podcast (once more without crediting Forbes), which became a YouTube video that "outranks all Forbes content on this topic, within Google search."

If you've not caught on yet, Perplexity didn't just outright plagiarise content; it essentially made money off Forbes' reporting. Lane goes on to write that this wasn't the first time it had happened. Perplexity has done this with CNBC, Bloomberg and several other news outlets in the US.

What makes it even worse for Perplexity is the fact that several tech journalists in the US have sunk their teeth into this story and haven't let go, and it's been a delight to read about it.

The Shortcut's Matt Swider went all out on what the actions of the tech company mean for journalism and the industry at large. He painted a grim picture and rightfully so. The way we're developing AI is going to hit news publishers, and it's going to hit hard.

What remains to be seen is whether bigwig news publishers will just sit back and let it happen, like when Google stormed the internet, or actually fight for their piece of the pie.

Swider puts it best: "Perplexity AI is stealing original journalism content and charging users a $20/mo monthly fee for it"

But perhaps the worst thing to come out of this is Perplexity CEO Srinivas' reaction. Not only has the plagiarised content not been taken down, no corrections have been made to prominently display attribution. What's more, Srinivas has been all but silent about it, offering only some thanks and a meek excuse that it was a "new product feature" which had some "rough edges".

I highly recommend checking out Dhruv Mehrotra and Tim Marchman of Wired. The two have written an amazing piece on all the kinds of not-very-cool-for-an-AI-to-do things that Perplexity does. Give it a read.

It's a rough time to be a journalist in any case. AI isn't making it any easier.

Ilya Sutskever Announces AI Venture

It's barely been a month since Ilya Sutskever left OpenAI and already the computer scientist has a new company. Earlier this week, Sutskever took to social media platform X (formerly Twitter) to announce his new venture: Safe Superintelligence Inc.

The Israeli-American has joined hands with Daniel Gross and Daniel Levy. The former was Apple's AI lead while the latter was a part of the technical staff at OpenAI.

The three have penned an open letter on SSI's X account, stating that their mission is "one goal and one product: Safe superintelligence." They've emphasised that while they do want the company to advance capabilities as fast as possible, they want to make sure that safety is their priority.

"Our singular focus means no distraction by management overhead or product cycles, and our business model means safety, security, and progress are all insulated from short-term commercial pressures," the letter read.

Superintelligence is within reach.

Building safe superintelligence (SSI) is the most important technical problem of our time.

We've started the world's first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It's called Safe Superintelligence…

It's possible that the line about being insulated from short-term commercial pressures was, in some way, a criticism of the way OpenAI, Microsoft Corp. and all the other bigger companies are monetising the technology without thinking of the safety aspects, something which Sutskever has been quite vocal about in the past.

In a blog post from 2023, written alongside Jan Leike (who co-led OpenAI's Superalignment team with Sutskever), the former OpenAI staffer claimed that the world was only 10 or so years away from AI that has superior intelligence compared to humans. The two wrote that when the time arrives, such an AI would not necessarily be benevolent, and that humanity needs to take steps to restrict the technology before it gets out of hand.

In fact, last year's drama at OpenAI was partly because of this. Sutskever had voiced concerns about how quickly the company was pushing forward with the technology, without regard for safety. In the end, however, Sutskever reversed his criticisms of OpenAI CEO Sam Altman and ended up being removed from the board.

It's worth pointing out that there was a series of high-profile resignations from OpenAI around the same time that Sutskever left, including Jan Leike and Gretchen Krueger, all with the same complaints about safety practices within the company.

Currently, SSI has offices in both Palo Alto, California in the US and Tel Aviv, Israel, where it intends to hire technical talent.

What will be interesting to watch is how SSI builds itself up as a for-profit organisation from the ground up. OpenAI tried the non-profit path, but quickly stumbled into a funding problem when it realised the cost of computation power and keeping the lights on. After all, it's part of the reason the company is now more or less a for-profit entity.

That being said, judging by the heavyweights who make up SSI, funding might be the last concern.

Nvidia's New AI Platform

Nvidia continues to go from strength to strength. Just days after it became the world's most valuable company, surpassing Microsoft, the company has released Nemotron-4 340B, a "family" of open source models, which developers can use to generate synthetic data for training large language models.

Nvidia's pitch is this: Given that robust, clean and usable datasets are prohibitively expensive and difficult to access, why not use synthetic data to get the same results?

It sounds pretty great as well. The company has already ensured that Nemotron plays nice with Nvidia NeMo (an open-source framework for end-to-end LLM training) and is optimised for its TensorRT-LLM library, which gives users access to easy-to-use Python application programming interfaces.

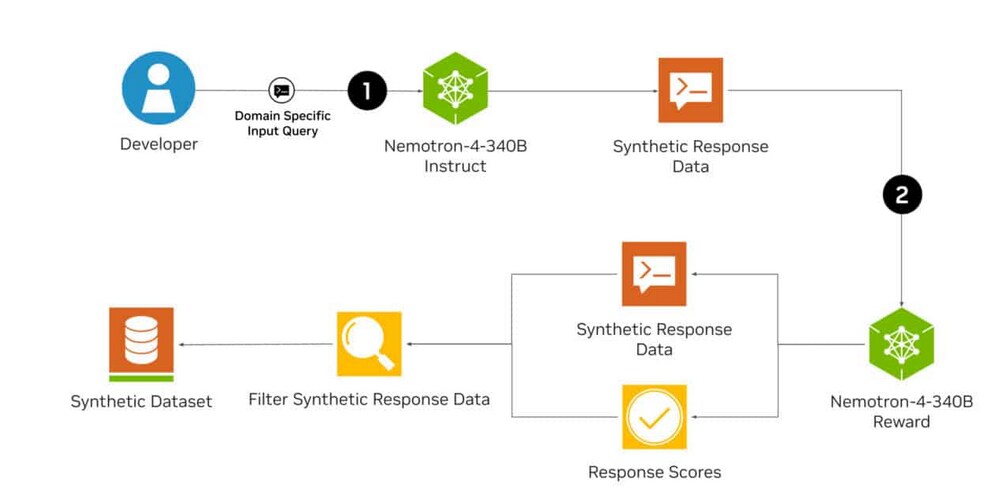

The Nemotron family consists of three models: base, instruct and reward.

Here's how it works:

1) Instruct model generates datasets that resemble real-world data.

2) Reward model inspects the synthetically generated dataset and grades the responses within on five parameters: helpfulness, correctness, coherence, complexity and verbosity.

3) Depending on responses, the reward model provides iterative improvements to tweak and finetune the data.

This ensures that the data is providing accurate results. This data is then used to guide the software for future iterations and make sure the responses are aligned with requirements.
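For readers who think in code, the generate-grade-filter loop described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Nvidia's implementation or API: `toy_generate` and `toy_score` are hypothetical stand-ins for calls to the instruct and reward models, and the 0-to-4 score range and the `min_quality` threshold are made up for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# The five attributes the reward model is said to grade.
ATTRIBUTES = ("helpfulness", "correctness", "coherence", "complexity", "verbosity")


@dataclass
class Sample:
    prompt: str
    response: str
    scores: Dict[str, float]


def build_dataset(
    prompts: List[str],
    generate: Callable[[str], str],
    score: Callable[[str, str], Dict[str, float]],
    min_quality: float = 3.0,  # hypothetical cut-off on an assumed 0-4 scale
) -> List[Sample]:
    """Generate a response per prompt, grade it, and keep only the good ones."""
    kept = []
    for prompt in prompts:
        response = generate(prompt)       # step 1: instruct model writes data
        scores = score(prompt, response)  # step 2: reward model grades it
        # Step 3: filter on the weakest of the quality-related attributes.
        quality = min(scores[a] for a in ("helpfulness", "correctness", "coherence"))
        if quality >= min_quality:
            kept.append(Sample(prompt, response, scores))
    return kept


# Hypothetical stand-ins so the sketch runs without any model.
def toy_generate(prompt: str) -> str:
    return f"Answer to: {prompt}"


def toy_score(prompt: str, response: str) -> Dict[str, float]:
    # Pretend that well-formed questions yield better responses.
    base = 3.5 if prompt.endswith("?") else 1.0
    return {name: base for name in ATTRIBUTES}


dataset = build_dataset(["What is a GPU?", "asdf"], toy_generate, toy_score)
print([sample.prompt for sample in dataset])
```

In a real setup, the two toy functions would be replaced by calls to whichever instruct and reward models a developer is using, and the loop could be repeated with new prompts until enough samples clear the bar.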

The Nemotron-4 340B models are now available for download via NVIDIA NGC and Hugging Face.

Godfather Of AI Joins UK Startup

Geoffrey Hinton has joined a startup, CuspAI, which has emerged from stealth with a $30-million seed funding round. What does CuspAI do? It is using AI to help users design next-gen building materials using deep learning and "molecular simulation to streamline the material design process." The British-Canadian is joining the company as a board advisor.

If you're wondering why his name is such a big deal, here's the thing. The name Geoffrey Hinton isn't quite uttered in the same hushed tones that characters from Mario Puzo's The Godfather use for the titular character, but it is just as well-known in AI circles.

CuspAI, founded by Max Welling and Dr. Chad Edwards, is trying to help create AI-designed materials that will allow for carbon capture and storage, which is, in fact, predicted to become a $4 trillion industry by 2050, according to Exxon (also known as the company that has successfully gaslit millions into believing climate change isn't real. Yes, the irony is palpable).

"I've been very impressed by CuspAI and its mission to accelerate the design process of new materials using AI to curb one of humanity's most urgent challenges—climate change," Hinton said in a press release from the climate tech company.

For someone known as the 'Godfather of AI', Hinton has been a vocal critic of the technology for a while now. He's never been one to shy away from calling AI the harbinger of doom for humanity. It's part of the reason why he quit Google last year.

Here's a quick excerpt from the New York Times, so you have context on his frame of thought:

Dr. Hinton said that when people used to ask him how he could work on technology that was potentially dangerous, he would paraphrase Robert Oppenheimer, who led the US effort to build the atomic bomb: “When you see something that is technically sweet, you go ahead and do it.”

He does not say that anymore.

Yeah... that's kinda depressing, right?

But hey, at least him joining CuspAI could mean the AI old-timer hasn't completely given up on humanity's ability to use the technology for good, before it makes use of us.

With inputs from Tejas Kala.

Beyond Tomorrow is a weekly newsletter sent to your inbox every Saturday to give you a roundup of everything AI in the last week.
